The Ethics and Regulation of Autonomous Vehicles

The arrival of autonomous vehicles (AVs), also known as self-driving cars, promises to transform the way we travel. But while the technology is advancing quickly, questions about the ethics and regulation of these vehicles are still very much up for debate. This article explores these key issues: the ethical dilemmas, the regulatory challenges, and what society needs to consider as autonomous vehicles become part of everyday life.

What Are Autonomous Vehicles?

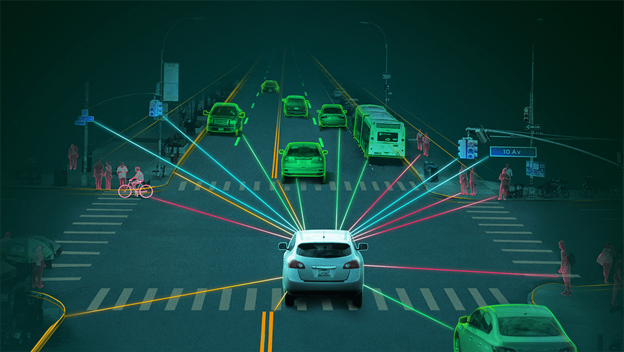

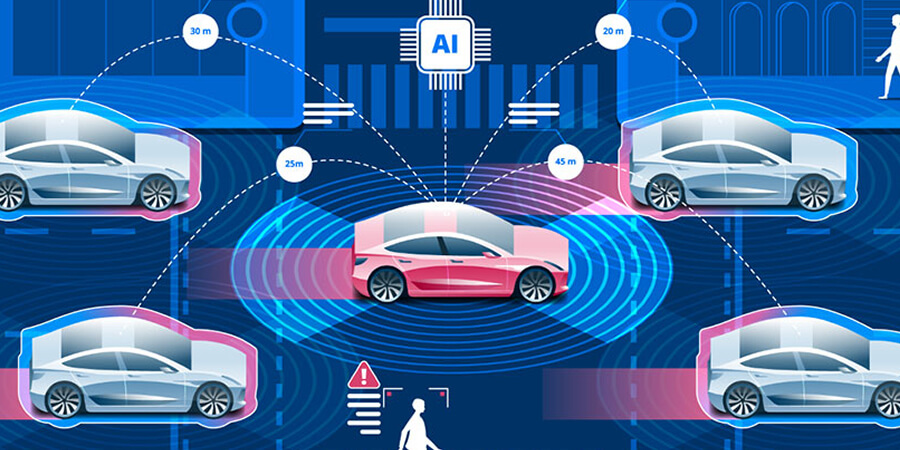

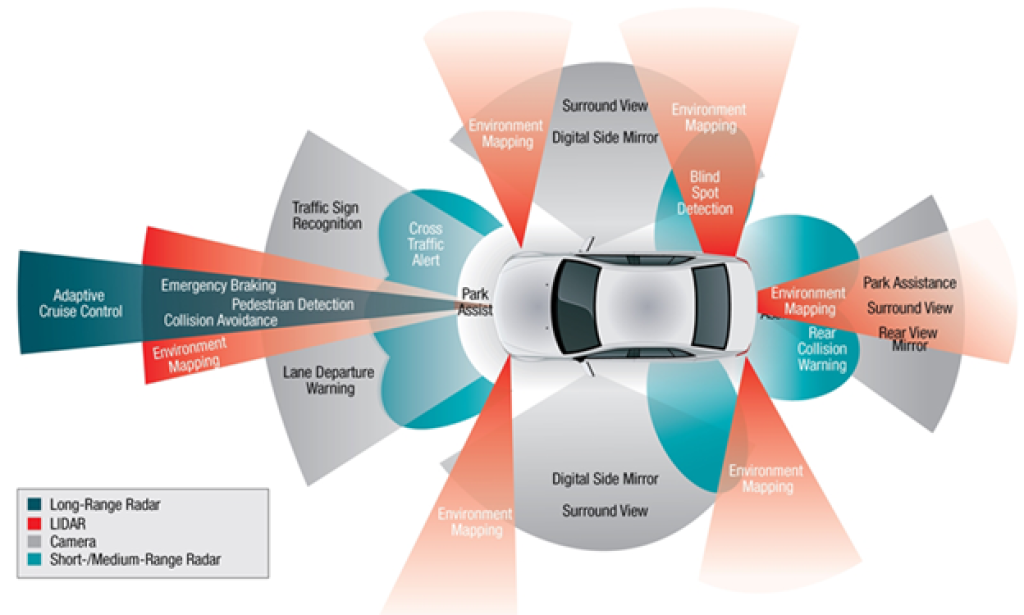

Autonomous vehicles are cars that can drive themselves with little or no human input. They use sensors, cameras, and artificial intelligence (AI) to navigate the road, make decisions, and avoid obstacles. In theory, autonomous vehicles could make transportation safer, more efficient, and more accessible. However, they also raise important questions about how they should behave in difficult situations and how we can ensure they are safe, fair, and beneficial for everyone.

Ethical Dilemmas of Autonomous Vehicles

1. The "Trolley Problem" in Self-Driving Cars

One of the best-known ethical challenges for autonomous vehicles is the Trolley Problem. Applied to self-driving cars, this classic thought experiment asks: if a crash is unavoidable, should the car prioritize the safety of its passengers or the safety of pedestrians?

For example, suppose a car is about to hit a group of pedestrians, and swerving to avoid them would harm its own passengers. What should the vehicle do? Should it minimize overall harm, sacrificing the passengers when they are outnumbered by the pedestrians? Or should it always prioritize the safety of the people inside?

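The problem is that there is no clear answer, and different ethical principles may suggest different solutions. Utilitarianism judges an action by its consequences and so favors whatever minimizes total harm. Deontology holds that some actions, such as deliberately steering into a person, are wrong regardless of the outcome. Accordingly, some people argue that self-driving cars should be programmed to always minimize harm, while others believe that cars should follow rules that protect human life in general, without making life-or-death trade-offs between individuals.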

2. Accountability: Who Is Responsible for Accidents?

Another ethical issue is accountability. If an autonomous vehicle is involved in an accident, who is responsible: the car's manufacturer, the software developer, or the person who owns the car? In a traditional vehicle, the driver makes the decisions and is generally held responsible for them. In an AV, decisions are made by programmed algorithms, which makes it unclear who should be held accountable for accidents.

For instance, if a self-driving car causes an accident because of a software glitch, should the company that made the software be liable? Or, if the car's sensors fail to detect an obstacle, should the manufacturer of those sensors take responsibility?

These questions highlight the need for clear laws and policies that define liability in the event of an accident.

3. Privacy and Data Security

Self-driving cars collect large amounts of data to function effectively. This includes information about the car’s surroundings, traffic patterns, and even the driver’s habits. While this data can make AVs safer and more efficient, it also raises privacy concerns.

Who owns the data generated by an autonomous vehicle? How is it used? And how can personal data be protected from hackers? For instance, an AV that constantly records its passengers’ locations, routines, and personal preferences builds a detailed profile of their lives, which poses a clear privacy risk. People might not want their every movement tracked and stored.

These concerns suggest the need for strong regulations that protect users' privacy and ensure data security.

The Role of Regulation in Autonomous Vehicles

1. Ensuring Safety

Perhaps the most important role of regulation is ensuring safety. Autonomous vehicles have the potential to reduce accidents caused by human error, which is a factor in the majority of traffic fatalities. However, AVs are not infallible: software glitches, sensor malfunctions, and other faults can still cause crashes.

Governments must set clear safety standards for AVs, ensuring that they meet certain requirements before they are allowed on the road. These regulations would likely include testing protocols, crash safety standards, and guidelines for how AVs interact with other road users (such as cyclists and pedestrians).

Testing AVs in real-world conditions without putting other road users at risk is a complex challenge. Regulatory bodies will need to establish guidelines for evaluating autonomous vehicles under a range of driving conditions, such as rain, snow, or dense urban environments.

2. Setting Legal Standards

As autonomous vehicles become more common, they will need a clear legal framework to govern their use. Such a framework would, for example, settle the liability question raised earlier: when an AV is involved in an accident, does responsibility fall on the manufacturer, the software developer, or the person using the vehicle?

Laws will also need to address insurance for AVs, as well as vehicle registration, roadworthiness, and testing. Countries and regions may need to create new legal definitions for terms like "driver" and "vehicle operator," since autonomous cars won’t have human drivers in the traditional sense.

3. Balancing Innovation and Public Safety

Regulation of AVs must strike a delicate balance between encouraging innovation and ensuring public safety. On the one hand, governments want to support the development of new technologies, as they can lead to economic growth, job creation, and a safer transportation system. On the other hand, regulators must ensure that autonomous vehicles are thoroughly tested and safe before they are widely deployed on the roads.

Finding this balance is especially challenging because autonomous vehicles are a new and fast-changing technology. Lawmakers will need to account for the rapid pace of innovation in the tech industry and update regulations as new advances are made.

4. Equity and Accessibility

As autonomous vehicles become more widespread, there is a risk that the benefits of this technology may not be evenly distributed. For example, while AVs could provide better mobility for people with disabilities or older adults, the cost of these vehicles may put them out of reach for lower-income individuals.

Regulation should consider how autonomous vehicles can be made accessible and affordable to everyone. Additionally, governments might need to establish guidelines for ensuring that AVs are deployed in ways that promote fairness and prevent discrimination.

The Future of Autonomous Vehicles: What Lies Ahead?

The future of autonomous vehicles depends on how we address these ethical and regulatory challenges. With proper oversight, AVs have the potential to reduce traffic accidents, lower emissions, and improve mobility for people who may otherwise have difficulty driving.

However, society must ensure that self-driving cars are introduced in a way that is ethical, fair, and beneficial to everyone. This will require collaboration between governments, tech companies, ethicists, and the public. Continuous dialogue and thoughtful regulation will be needed to guide the development of autonomous vehicles in a responsible direction.

Conclusion

Autonomous vehicles are no longer a distant dream; they are becoming a reality. As they evolve, however, we must carefully consider the ethical dilemmas and regulatory challenges they present. Whether it's addressing the Trolley Problem, determining accountability in accidents, or ensuring privacy and data security, the road ahead will require careful thought and collaboration.

With clear ethical guidelines and well-structured regulations, autonomous vehicles can become a positive force for change in the transportation industry. However, ensuring their success will depend on thoughtful, fair, and adaptive policies that protect the public, support innovation, and foster trust in this transformative technology.
